Publications

Group highlights

For a full list of publications, see Google Scholar.

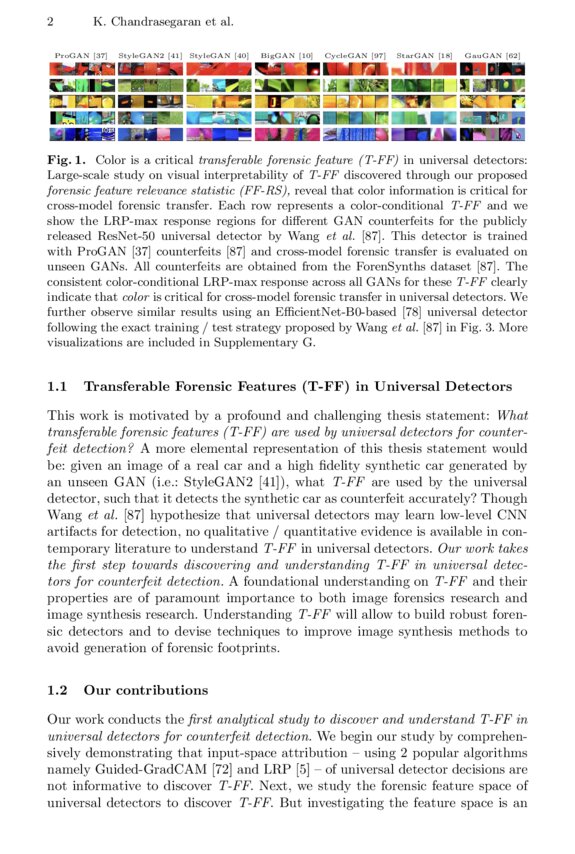

Visual counterfeits are increasingly causing an existential conundrum in mainstream media with the rapid evolution of neural image synthesis methods. Though detecting such counterfeits has been a taxing problem in the image forensics community, a recent class of forensic detectors, universal detectors, can surprisingly spot counterfeit images regardless of generator architectures, loss functions, training datasets, and resolutions. This intriguing property suggests the possible existence of transferable forensic features (T-FF) in universal detectors. In this work, we conduct the first analytical study to discover and understand T-FF in universal detectors. Our contributions are two-fold: 1) we propose a novel forensic feature relevance statistic (FF-RS) to quantify and discover T-FF in universal detectors; and 2) our qualitative and quantitative investigations uncover an unexpected finding: color is a critical T-FF in universal detectors. Code and models are available at https://keshik6.github.io/transferable-forensic-features/

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Alexander Binder, Ngai-Man Cheung

ECCV-2022 (Oral). European Conference on Computer Vision

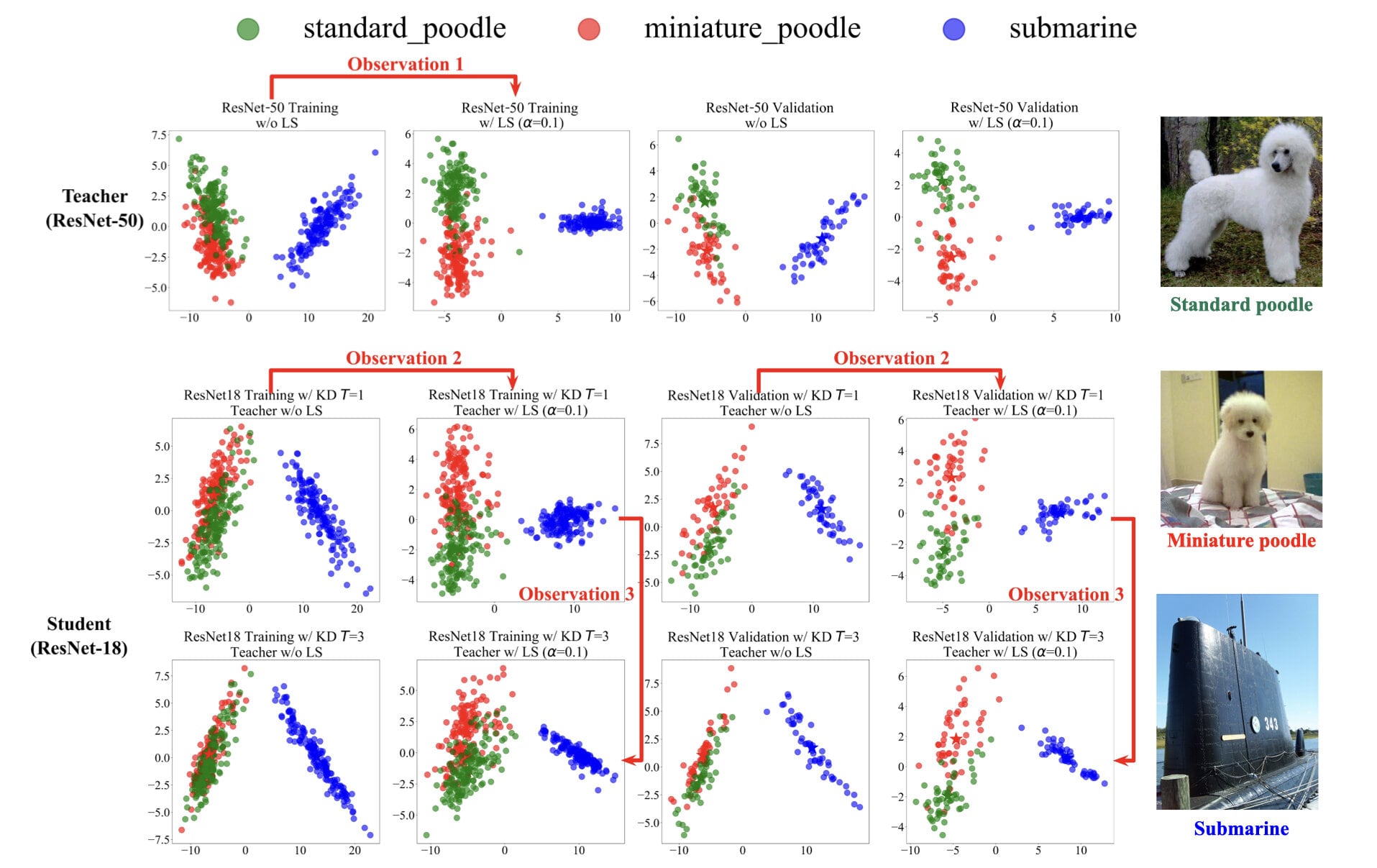

This work investigates the compatibility between label smoothing (LS) and knowledge distillation (KD). Contemporary findings on this question take dichotomous standpoints: Müller et al. (2019) and Shen et al. (2021b). Critically, there has been no effort to understand and resolve these contradictory findings, leaving the primal question (to smooth or not to smooth a teacher network?) unanswered. The main contributions of our work are the discovery, analysis and validation of systematic diffusion as the missing concept that is instrumental in understanding and resolving these contradictory findings. This systematic diffusion essentially curtails the benefits of distilling from an LS-trained teacher, thereby rendering KD at increased temperatures ineffective. Our discovery is comprehensively supported by large-scale experiments, analyses and case studies, including image classification, neural machine translation and compact student distillation tasks spanning multiple datasets and teacher-student architectures. Based on our analysis, we suggest that practitioners use an LS-trained teacher with a low-temperature transfer to achieve high-performance students. Code and models are available at https://keshik6.github.io/revisiting-ls-kd-compatibility/
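The two techniques this abstract studies can be illustrated with their common textbook definitions. The sketch below is a minimal pure-Python illustration, not the authors' released code: label smoothing mixes the one-hot target with a uniform distribution using a factor `alpha`, and knowledge distillation compares teacher and student softmax outputs at a temperature `T`. The function names, the example logits, and the conventional `T**2` loss scaling are assumptions chosen for illustration.

```python
import math

# Minimal illustration of textbook LS and KD; hypothetical helper names,
# not the implementation from the paper.

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def label_smoothed_target(true_class, num_classes, alpha):
    """(1 - alpha) * one_hot + alpha / K, the standard label-smoothing target."""
    return [(1.0 - alpha) * (1.0 if k == true_class else 0.0) + alpha / num_classes
            for k in range(num_classes)]

def kd_loss(student_logits, teacher_logits, temperature):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return (temperature ** 2) * kl

if __name__ == "__main__":
    teacher_logits = [4.0, 1.0, 0.0]  # hypothetical teacher outputs for 3 classes
    print(softmax(teacher_logits, 1.0))  # peaked distribution
    print(softmax(teacher_logits, 4.0))  # softer distribution at a higher temperature
    print(label_smoothed_target(true_class=0, num_classes=3, alpha=0.1))
    print(kd_loss([0.0, 0.0, 0.0], teacher_logits, temperature=2.0))
```

Comparing the two `softmax` calls shows how a higher temperature spreads the teacher's probability mass over non-target classes; the abstract's recommendation is to keep this transfer temperature low when the teacher was trained with label smoothing.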

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Yunqing Zhao, Ngai-Man Cheung

International Conference on Machine Learning (ICML 2022)

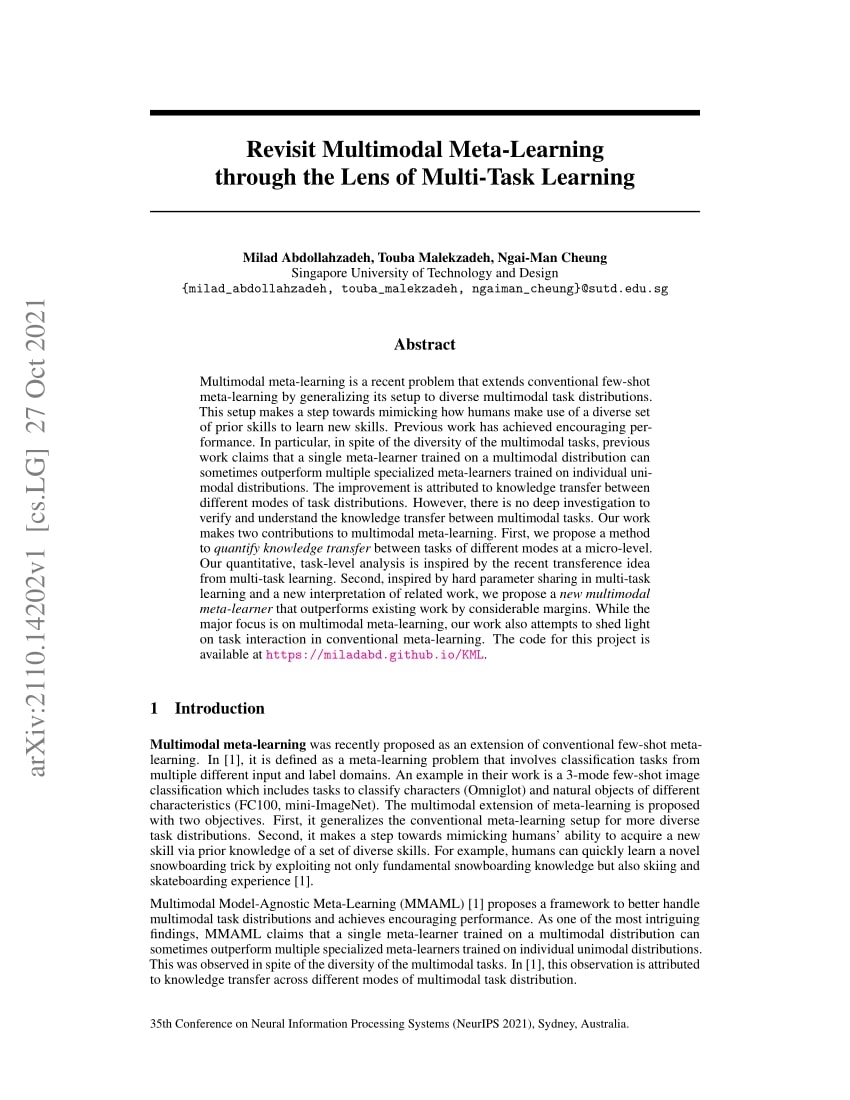

Multimodal meta-learning is a recent problem that extends conventional few-shot meta-learning by generalizing its setup to diverse multimodal task distributions. This setup takes a step towards mimicking how humans use a diverse set of prior skills to learn new skills. Previous work has achieved encouraging performance. In particular, in spite of the diversity of the multimodal tasks, previous work claims that a single meta-learner trained on a multimodal distribution can sometimes outperform multiple specialized meta-learners trained on individual unimodal distributions. The improvement is attributed to knowledge transfer between different modes of task distributions. However, there has been no in-depth investigation to verify and understand the knowledge transfer between multimodal tasks. Our work makes two contributions to multimodal meta-learning. First, we propose a method to quantify knowledge transfer between tasks of different modes at a micro-level. Our quantitative, task-level analysis is inspired by the recent transference idea from multi-task learning. Second, inspired by hard parameter sharing in multi-task learning and a new interpretation of related work, we propose a new multimodal meta-learner that outperforms existing work by considerable margins. While the major focus is on multimodal meta-learning, our work also attempts to shed light on task interaction in conventional meta-learning. The code for this project is available at https://miladabd.github.io/KML

Milad Abdollahzadeh, Touba Malekzadeh, Ngai-Man Cheung

Advances in Neural Information Processing Systems 34 (NeurIPS 2021)

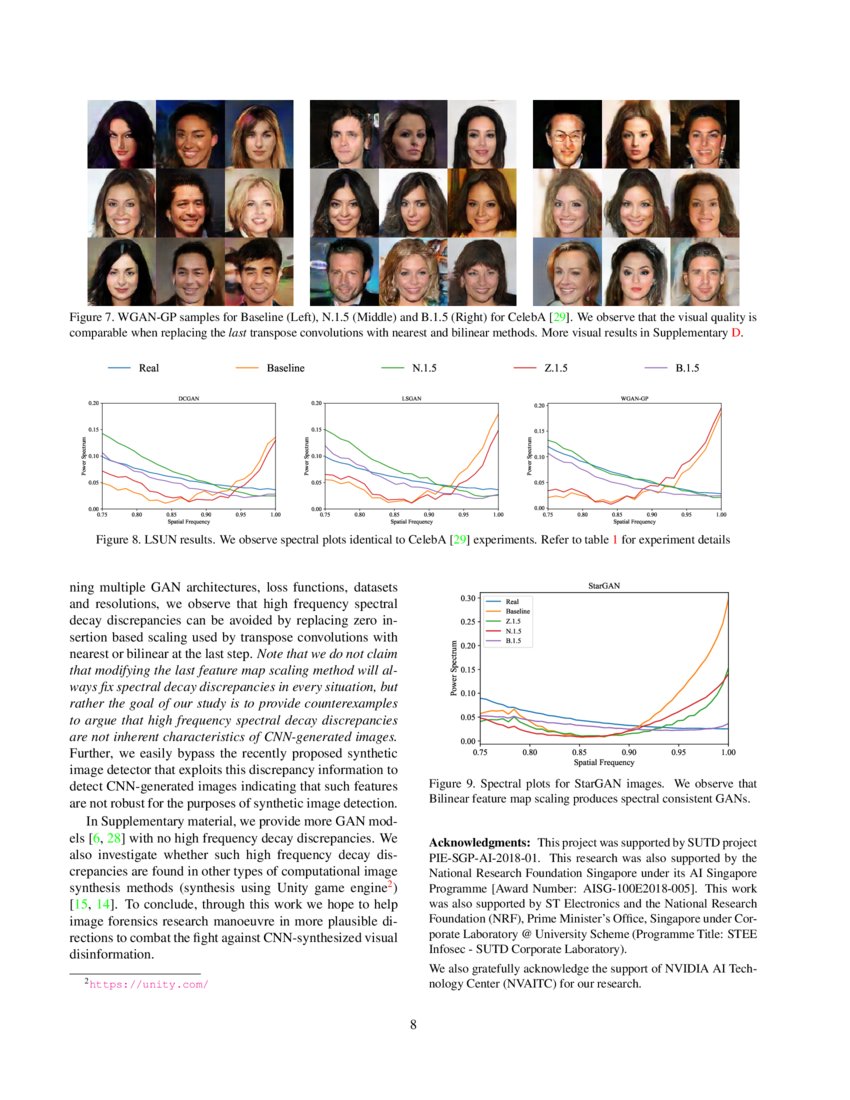

CNN-based generative modelling has evolved to produce synthetic images indistinguishable from real images in the RGB pixel space. Recent works have observed that CNN-generated images share a systematic shortcoming in replicating high-frequency Fourier spectrum decay attributes. Furthermore, these works have successfully exploited this shortcoming to detect CNN-generated images, reporting up to near-perfect accuracy across multiple state-of-the-art GAN models. In our work, we investigate the validity of assertions claiming that CNN-generated images are unable to achieve high-frequency spectral decay consistency. Using handcrafted experiments, we meticulously construct a counterexample space of CNN-generated images with consistent high-frequency spectral decay, and empirically show that this frequency discrepancy can be avoided by a minor architecture change in the last upsampling operation. We subsequently use images from this counterexample space to successfully bypass the recently proposed forensic detector that leverages high-frequency Fourier spectrum decay attributes for CNN-generated image detection. Through our study, we show that high-frequency Fourier spectrum decay discrepancies are not inherent characteristics of existing CNN-based generative models, contrary to the belief of some existing work, and that such features are not robust for synthetic image detection.
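To make "high-frequency Fourier spectrum decay" concrete, the sketch below computes the power spectrum of a 1D signal (for instance, one row of pixel intensities) with a naive discrete Fourier transform and fits a power-law slope to its highest-frequency bins by least squares in log-log space. This is a simplified illustration under stated assumptions, not the detector studied in the paper: practical detectors operate on 2D, azimuthally averaged spectra of whole images, and the function names and the `tail_fraction` parameter here are hypothetical.

```python
import cmath
import math

# Simplified 1D illustration with hypothetical helper names;
# not the forensic detector evaluated in the paper.

def power_spectrum(signal):
    """Naive O(N^2) DFT; returns |X[k]|^2 for k = 1 .. N//2 - 1 (DC and Nyquist skipped)."""
    n = len(signal)
    spectrum = []
    for k in range(1, n // 2):
        xk = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(xk) ** 2)
    return spectrum

def tail_decay_slope(spectrum, tail_fraction=0.25):
    """Least-squares slope of log(power) vs log(frequency) over the highest-frequency bins."""
    n_tail = max(2, int(len(spectrum) * tail_fraction))
    bins = range(len(spectrum) - n_tail + 1, len(spectrum) + 1)  # frequency indices k
    xs = [math.log(k) for k in bins]
    ys = [math.log(spectrum[k - 1] + 1e-12) for k in bins]  # spectrum[k - 1] holds bin k
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den

if __name__ == "__main__":
    n = 32
    # Synthetic row whose k-th harmonic has amplitude 1/k, so power falls off as 1/k^2.
    row = [sum(math.cos(2 * math.pi * k * t / n) / k for k in range(1, n // 2))
           for t in range(n)]
    print(tail_decay_slope(power_spectrum(row)))  # approximately -2.0
```

The synthetic row gives its k-th harmonic an amplitude of 1/k, so its power falls off as 1/k^2 and the fitted tail slope is about -2. A spectrum whose high-frequency tail decays differently, as reported for some CNN-generated images, would yield a different slope.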

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Ngai-Man Cheung

CVPR-2021 (Oral). Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

2023

Exploring Incompatible Knowledge Transfer in Few-shot Image Generation

Yunqing Zhao, Chao Du, Milad Abdollahzadeh, Tianyu Pang, Min Lin, Shuicheng Yan, Ngai-Man Cheung

CVPR-2023. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

Re-thinking Model Inversion Attacks Against Deep Neural Networks

Ngoc-Bao Nguyen(*), Keshigeyan Chandrasegaran(*), Milad Abdollahzadeh, Ngai-Man Cheung. (*) denotes Equal Contribution

CVPR-2023. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

Fair Generative Models via Transfer Learning

Christopher TH Teo, Milad Abdollahzadeh, Ngai-Man Cheung

AAAI-2023.

2022

Few-shot Image Generation via Adaptation-Aware Kernel Modulation

Yunqing Zhao(*), Keshigeyan Chandrasegaran(*), Milad Abdollahzadeh(*), Ngai-Man Cheung. (*) denotes Equal Contribution

NeurIPS-2022. Advances in Neural Information Processing Systems 35 (NeurIPS).

Discovering Transferable Forensic Features for CNN-generated Images Detection

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Alexander Binder, Ngai-Man Cheung

ECCV-2022 (Oral). European Conference on Computer Vision

Revisiting Label Smoothing and Knowledge Distillation Compatibility: What was Missing?

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Yunqing Zhao, Ngai-Man Cheung

International Conference on Machine Learning (ICML 2022)

A Closer Look at Few-shot Image Generation

Yunqing Zhao, Henghui Ding, Houjing Huang, Ngai-Man Cheung

CVPR-2022. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

Graph-wise Common Latent Factor Extraction for Unsupervised Graph Representation Learning

Thilini Cooray, Ngai-Man Cheung

AAAI-2022 (Oral)

2021

Revisit Multimodal Meta-Learning through the Lens of Multi-Task Learning

Milad Abdollahzadeh, Touba Malekzadeh, Ngai-Man Cheung

Advances in Neural Information Processing Systems 34 (NeurIPS 2021)

Shell Theory: A Statistical Model of Reality

Daniel Lin, Siying Liu, Hongdong Li, Ngai-Man Cheung, Changhao Ren, Yasuyuki Matsushita

IEEE Transactions on Pattern Analysis and Machine Intelligence

Joint estimation of low-rank components and connectivity graph in high-dimensional graph signals: Application to brain imaging

Rui Liu, Ngai-Man Cheung

Signal Processing

A Closer Look at Fourier Spectrum Discrepancies for CNN-generated Images Detection

Keshigeyan Chandrasegaran, Ngoc-Trung Tran, Ngai-Man Cheung

CVPR-2021 (Oral). Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

On Data Augmentation for GAN training

Ngoc-Trung Tran, Viet-Hung Tran, Ngoc-Bao Nguyen, Trung-Kien Nguyen, Ngai-Man Cheung

IEEE Transactions on Image Processing

2020

Direct Quantization for Training Highly Accurate Low Bit-width Deep Neural Networks

Tuan Hoang, Thanh-Toan Do, Tam V Nguyen, Ngai-Man Cheung

IJCAI-2020. International Joint Conference on Artificial Intelligence

Unsupervised Deep Cross-modality Spectral Hashing

Tuan Hoang, Thanh-Toan Do, Tam V Nguyen, Ngai-Man Cheung

IEEE Transactions on Image Processing

Explanation-guided training for cross-domain few-shot classification

Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Yunqing Zhao, Ngai-Man Cheung, Alexander Binder

ICPR-2020. International Conference on Pattern Recognition

InfoMax-GAN: Improved Adversarial Image Generation via Information Maximization and Contrastive Learning

Kwot Sin Lee, Ngoc-Trung Tran, Ngai-Man Cheung

WACV-2021 (Oral). IEEE Winter Conference on Applications of Computer Vision

TEAGS: time-aware text embedding approach to generate subgraphs

Saeid Hosseini, Saeed Najafipour, Ngai-Man Cheung, Hongzhi Yin, Mohammad Reza Kangavari, Xiaofang Zhou

Data Mining and Knowledge Discovery

Simultaneous compression and quantization: A joint approach for efficient unsupervised hashing

Tuan Hoang, Thanh-Toan Do, Huu Le, Dang-Khoa Le-Tan, Ngai-Man Cheung

Computer Vision and Image Understanding

Attentive Weights Generation for Few Shot Learning via Information Maximization

Yiluan Guo, Ngai-Man Cheung

CVPR-2020. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

Attention-Based Context Aware Reasoning for Situation Recognition

Thilini Cooray, Ngai-Man Cheung, Wei Lu

CVPR-2020. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition